Edge computing processes data faster and more efficiently by keeping the work close to where the data is produced, and it has changed computer vision for the better. Combining the two technologies has produced kinds of software that were only ideas before. This article introduces edge computing and computer vision, discusses the benefits of merging them, and describes the resulting applications, for both technical and non-technical readers.

What is Edge Computing?

Edge computing is an emerging approach in which data is processed close to where it is generated, rather than being sent to a distant data center. Computers and smartphones, for example, are edge devices that collect and process data in the physical world. Because the device sits at the edge of the network, next to the data source, the distance the data has to travel is reduced.

Benefits of Edge Computing

- It reduces latency because the data is processed at or near the device that produces it. In contrast, when devices depend on a centralized hosting platform, every request has to travel to a remote server, so responses slow down whenever the internet connection has problems.

- While desktop MFA or device MFA is a critical infosec solution for organizations, edge computing also limits the impact of data theft: each device holds only a small share of the data, so there is less for attackers to collect in a single breach. A centralized hosting platform, on the other hand, holds large amounts of data that can all be compromised by one security incident.

- It saves money because less data is sent over the network, so companies won’t need to pay extra operational costs for the bandwidth to handle the data load.

- Since the data stays in one place, on or near the device that collected it, it’s easier to comply with privacy and regulatory requirements.

- Edge computing makes data access more reliable because local processing keeps working even when internet connectivity is poor or unavailable.

- It supports artificial intelligence and machine learning (AI/ML) workloads, which need to process large volumes of data, by running that processing close to where the data is collected.

Computer Vision

Have you ever wondered how Google Photos on your Android phone can tell if there’s a cat in the picture? If you haven’t tried it, search for the word “cat” and see what comes up. This is possible because computer vision, a field of AI, allows computers to see, observe, and understand images.

The cat example is just one basic application of computer vision. It has many others, like security monitoring, automotive defect detection, facial recognition, and more. The market continues to grow because computer vision can process images much faster than a human can.

How Computer Vision Works

Let’s return to the cat example. How does the application recognize the cat? Humans identify cats because we have seen them before. In many cases, a child is shown a picture of a cat before ever seeing a real one, and a human mind can recognize a cat after seeing a single picture.

Computers, in contrast, must be fed enormous amounts of data before they can see pictures the way human eyes do. Think of a thousand pictures, at least. They don’t conclude that there’s a cat in the picture because it has pointy ears and fur; they recognize it because they have seen many cats before. Although a computer needs thousands of images to learn to recognize cats, once trained it can do so much faster than humans.

Now let’s get a bit technical and set the cat example aside. Let’s look at how computer vision works.

Computer vision relies on machine learning and, in particular, on deep learning. Deep learning is a subset of machine learning that uses artificial neural networks with many layers, which are loosely modeled on the human brain; both approaches learn from data. For images, the most widely used deep learning models are convolutional neural networks (CNNs), which help the computer see a picture in a way that resembles human vision.

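Briefly, convolutional neural networks break an image down into small regions, detect patterns such as edges and textures in them, and use those patterns to label what the image contains, so the machine can tell the difference between one state of an object and another. This way, the computer can tell if a product is defective, for instance.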
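To make the idea of breaking an image down more concrete, here is a minimal sketch of the sliding-filter operation at the core of a convolutional layer. It assumes NumPy, a toy 5x5 image, and a hand-picked edge filter; the function name `convolve2d` is illustrative, and a real CNN learns its filters from training data instead of having them written by hand.

```python
import numpy as np

def convolve2d(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and return the response map.

    Like CNN layers, this does not flip the kernel (strictly, a cross-correlation).
    """
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            # Multiply the filter with the patch under it and sum the result
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

# Toy grayscale image: dark columns (0) on the left, bright columns (1) on the right
image = np.array([[0, 0, 1, 1, 1]] * 5, dtype=float)

# Hand-picked vertical-edge filter (an assumption for illustration)
kernel = np.array([[-1, 0, 1],
                   [-1, 0, 1],
                   [-1, 0, 1]], dtype=float)

response = convolve2d(image, kernel)
print(response)
```

The response is large where a patch spans the dark-to-bright boundary and zero where the patch is uniformly bright, which is how a filter "tags" a region as containing an edge.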

Edge Computing for Computer Vision

As mentioned, edge computing allows for speedy data processing, and computer vision relies on an enormous volume of data. Combined, edge computing enables computer vision to work faster and more efficiently in real time, with less required bandwidth and more security because images are processed locally. By utilizing computer vision software development services, businesses can optimize these technologies to achieve superior performance and integration. Latency also drops, because the device doesn’t need a strong internet connection to get a result.

Benefits of Edge Computer Vision

Edge Computing enhances four computer vision techniques:

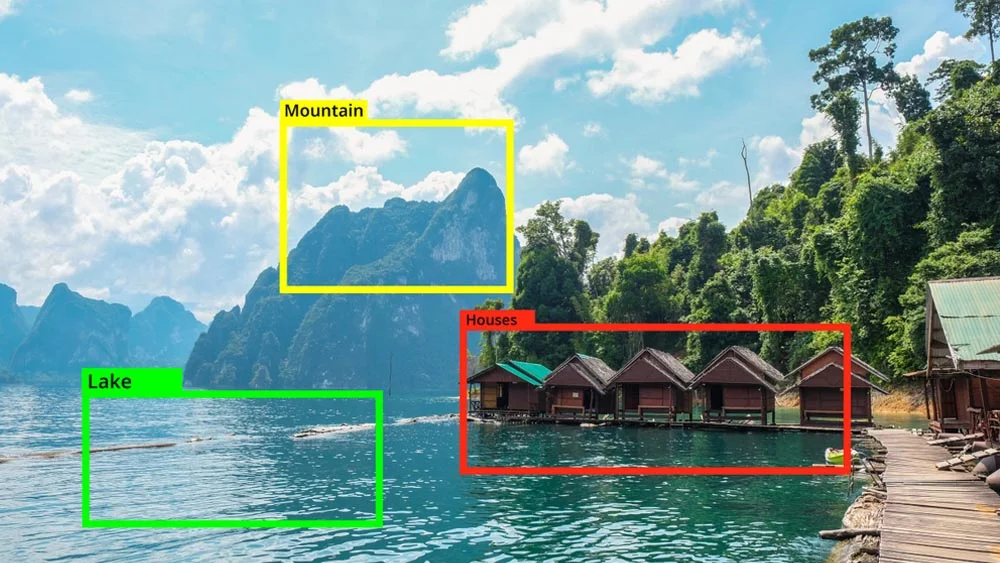

1. Object Detection

Object detection is concerned with detecting objects in a photo and recognizing their location. Thanks to edge computing, this can now happen on a local device, such as a camera or a phone, rather than on a cloud processing platform. This is helpful with security cameras, as they can locate people or objects, such as trespassers or lost children, in specific locations.
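As a rough illustration of the security-camera case, the sketch below assumes an on-device detector has already produced labeled bounding boxes for a frame, and checks locally which people are inside a restricted area. The `Detection` class, the `people_in_zone` helper, the coordinates, and the confidence threshold are all hypothetical; they do not come from any particular detection library.

```python
from dataclasses import dataclass

@dataclass
class Detection:
    label: str
    box: tuple          # (x_min, y_min, x_max, y_max) in pixels
    confidence: float   # detector score between 0 and 1

def boxes_overlap(a, b):
    """True if two (x_min, y_min, x_max, y_max) boxes share any area."""
    return a[0] < b[2] and b[0] < a[2] and a[1] < b[3] and b[1] < a[3]

def people_in_zone(detections, zone, min_confidence=0.5):
    """Keep confident 'person' detections whose box overlaps the zone."""
    return [
        d for d in detections
        if d.label == "person"
        and d.confidence >= min_confidence
        and boxes_overlap(d.box, zone)
    ]

# Hypothetical detector output for one camera frame
detections = [
    Detection("person", (40, 60, 120, 220), 0.91),   # inside the zone
    Detection("person", (400, 80, 470, 230), 0.88),  # outside the zone
    Detection("dog", (60, 150, 110, 200), 0.75),     # not a person
    Detection("person", (50, 50, 100, 200), 0.30),   # too uncertain
]
restricted_zone = (0, 0, 200, 300)

alerts = people_in_zone(detections, restricted_zone)
print(alerts)
```

Because the check runs on the camera itself, only the alert (not the full video stream) would need to leave the device.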

2. Image Classification

Image classification analyzes an image’s features, visuals, and patterns to classify it into one of several predefined categories. As with object detection, devices can now do this even with a poor internet connection.

This can be helpful in medical imaging, autonomous driving, and quality control. In medical imaging, it helps with fast diagnosis and treatment. In autonomous driving, vehicles can detect pedestrians and other cars to prevent collisions. In quality control, it can detect defects in products, which reduces the need for manual inspection.
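The final step of classification, turning a model's raw scores into one of the predefined categories, can be sketched as follows. This assumes NumPy and a model that outputs one raw score per category; the labels and score values are made up for a quality-control example.

```python
import numpy as np

def softmax(scores):
    """Convert raw scores into probabilities that sum to 1."""
    shifted = scores - np.max(scores)  # subtract the max for numerical stability
    exp = np.exp(shifted)
    return exp / exp.sum()

def classify(scores, labels):
    """Return the most likely label and its probability."""
    probs = softmax(np.asarray(scores, dtype=float))
    best = int(np.argmax(probs))
    return labels[best], float(probs[best])

# Hypothetical categories and raw model outputs for one product image
labels = ["defective", "acceptable"]
raw_scores = [2.0, 0.5]

label, confidence = classify(raw_scores, labels)
print(label, round(confidence, 3))
```

On an edge device, this decision is made locally, so a production line or vehicle would not have to wait for a round trip to a server before acting on it.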

3. Feature Extraction

Feature extraction is an early phase in the computer vision pipeline: it pulls the relevant information out of an image so that it can be analyzed in later steps. It differs from image classification in that feature extraction identifies the important features, and image classification then uses those features to categorize the image. Because edge computing enables local processing, feature extraction happens with less latency and reduced bandwidth consumption.
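The bandwidth point can be illustrated with a small sketch. It assumes NumPy and uses two simple hand-crafted features (an intensity histogram and an average edge strength) as stand-ins; the `extract_features` function is hypothetical, and real systems typically use features learned by a neural network.

```python
import numpy as np

def extract_features(image, bins=8):
    """Summarize a grayscale image (values 0-255) as a short feature vector.

    Features: a normalized intensity histogram plus the mean gradient magnitude.
    """
    hist, _ = np.histogram(image, bins=bins, range=(0, 256))
    hist = hist / image.size                      # fraction of pixels per bin
    gy, gx = np.gradient(image.astype(float))     # intensity change per axis
    edge_strength = np.mean(np.hypot(gx, gy))
    return np.concatenate([hist, [edge_strength]]).astype(np.float32)

# Stand-in for a 640x480 camera frame (random pixels, for illustration only)
rng = np.random.default_rng(0)
image = rng.integers(0, 256, size=(480, 640), dtype=np.uint8)

features = extract_features(image)

# Raw frame vs. feature vector: 307200 bytes vs. 36 bytes
print(image.nbytes, features.nbytes)
```

If the device sends only the feature vector onward, it transmits a few dozen bytes per frame instead of hundreds of kilobytes.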

4. Anomaly Detection

Anomaly detection is the technique of analyzing image data and detecting unusual or unexpected events or patterns. It helps to identify outliers, novelties, and other data points that deviate from the norm. Errors, fraud, broken equipment, and security breaches are just a few potential causes of anomalies.

Naturally, it’s faster and more efficient to detect anomalies with local processing than with cloud-based servers. With edge computer vision, it’s easier for companies to detect threats and prevent accidents, in addition to improving the efficiency of their devices.
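One simple way to flag outliers, sketched below, is to compare each new measurement with the mean and standard deviation of readings taken during normal operation. This is only one of many possible techniques, and the measurement (for example, a part's width in pixels taken from each frame), the sample values, and the threshold of three standard deviations are assumptions for illustration.

```python
import statistics

def find_anomalies(baseline, new_values, threshold=3.0):
    """Return the new values that lie more than `threshold` standard
    deviations away from the mean of the baseline readings."""
    mean = statistics.mean(baseline)
    stdev = statistics.stdev(baseline)
    return [v for v in new_values if abs(v - mean) > threshold * stdev]

# Hypothetical measurements from frames captured during normal operation
baseline = [10.1, 9.9, 10.0, 10.2, 9.8, 10.0, 10.1, 9.9]

# Measurements from newly captured frames
new_values = [10.0, 10.3, 14.5, 9.7]

anomalies = find_anomalies(baseline, new_values)
print(anomalies)
```

A check this small can run on the device for every frame, so an unusual reading can raise an alert immediately instead of waiting for a cloud service to respond.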

Edge computer vision is one of the new technologies that are revolutionizing the tech industry. Another that comes to mind is no-code development. No-code app-building platforms let users create apps without writing code. One of the best app builders on the market is the nandbox app builder, which stands out from other app builders because the apps it creates are fully native Android and iOS apps. Sign up and try it now!