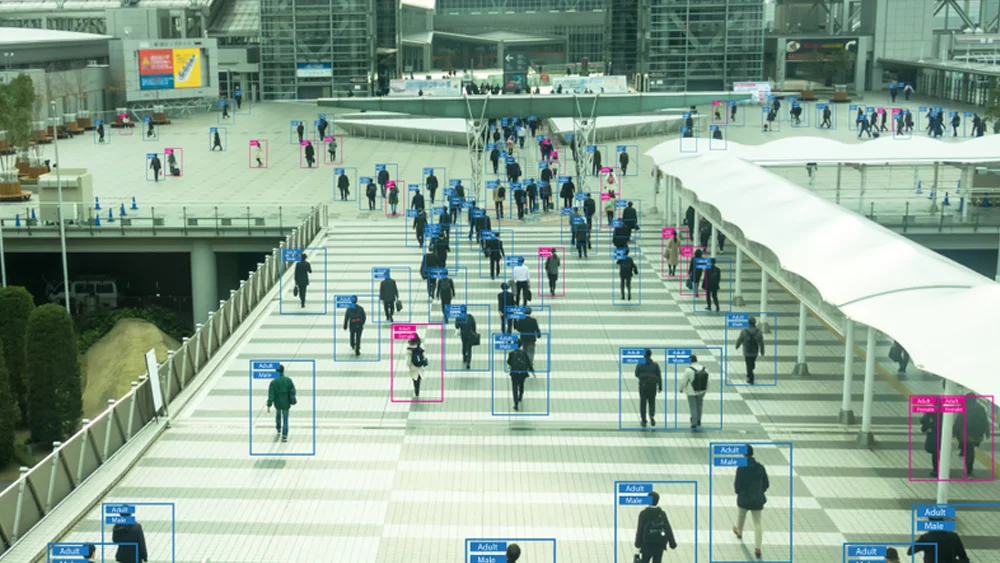

Object detection and localization are key capabilities in computer vision that enable systems to identify and locate objects within images or videos. These techniques play a critical role in a wide range of applications, including self-driving vehicles, surveillance systems, medical imaging analysis, robotics, and augmented reality.

At a high level, object detection involves identifying instances of objects from a known set of classes, such as people, cars, or animals. Object localization goes a step further to determine the precise location of objects within the image, usually in the form of bounding box coordinates. Together, detection and localization enable a computer vision system to not just classify what objects are present, but also pinpoint where they are in the visual scene.

The ability to reliably detect and localize objects has become an essential requirement for many computer vision systems operating in the real world. Self-driving cars need to detect other vehicles, pedestrians, and road signs, while augmented reality services rely on detecting surfaces and objects to overlay digital content. In this context, having a robust computer vision solution is crucial. Medical image analysis requires identifying organs or regions of interest. Object detection and localization serve as critical enablers for these and many other applications across industries.

Challenges and Limitations

Object detection and localization face several key challenges that limit performance in real-world applications:

Occlusion:

Objects in images and videos are often partially obstructed by other objects. Occlusion makes it difficult to identify the full object and localize its exact position, especially when large portions are hidden. Methods to handle occlusion remain an open research problem.

Lighting Conditions:

Changes in lighting, shadows, brightness, and contrast can alter the appearance of objects, creating challenges for detection algorithms dependent on color, texture, and shape features. Performance often degrades under suboptimal lighting. Approaches like data augmentation help improve robustness.

Orientation:

Real objects are captured from different viewpoints and orientations. Many algorithms are sensitive to rotated or tilted objects. Rotation invariance remains difficult, though techniques like spatial pyramids help improve orientation robustness.

Computational Complexity:

state-of-the-art deep learning models have high computational requirements, making real-time inference difficult on hardware-constrained devices. Model optimization, compression, and efficient architectures help improve speed, but more breakthroughs are needed for low-power applications.

Other Issues:

factors like motion blur, low resolution, small objects, background clutter, diversity of objects, and similarity between classes also pose challenges and hurt performance. Continued research is still needed to handle these and build more generalized, robust systems.

Common Approaches

Object detection algorithms typically fall into one of three main categories: sliding window detectors, region-based convolutional neural networks (CNNs), and single-shot detectors.

Sliding Window Detectors

Early object detectors relied on the sliding window technique. This involves taking a classifier for an object and sliding it across the image, extracting positive and negative patches at different locations and scales. The classifier is applied at each window location to determine if the target object is present. This approach was popularized by detectors like Viola-Jones for faces and HOG for pedestrians. However, sliding window methods are computationally expensive since the classifier must be run thousands of times per image.

Region-Based CNNs

More recent state-of-the-art detectors use region proposal networks within a CNN pipeline. These generate potential object regions or “proposals” so that the CNN only needs to evaluate a small subset of regions per image rather than exhaustively search across all locations and scales. Popular examples include R-CNN, Fast R-CNN, and Faster R-CNN. These region-based CNNs offer significantly better accuracy over sliding window approaches. However, they have high computational requirements during training and inference.

Single-Shot Detectors

Single-shot detectors were introduced to improve the speed and efficiency of region-based CNNs. As the name implies, single-shot detectors perform object localization and classification in one pass through the network. This avoids the need for separate region proposals and classification steps. Leading single-shot detectors include SSD and YOLO variants. While generally faster, they trade off some accuracy compared to region-based methods.

When designing a modern object detection system, the choice of approach involves balancing tradeoffs between accuracy, speed, and complexity. But CNN-based detectors now clearly dominate over traditional sliding window methods.

Key Algorithms

Region-Based CNN (R-CNN)

A pioneering algorithm for object detection, published in 2013. R-CNN uses regions of interest generated from a selective search algorithm and classifies each region using a convolutional neural network. Despite the groundbreaking performance, R-CNN was slow due to the region proposal method and repeated forward passes through the CNN to classify the many region proposals.

Fast R-CNN

Introduced by Ross Girshick in 2015, Fast R-CNN improves speed by orders of magnitude compared to R-CNN. The key difference is that it processes the entire image with the CNN once to generate a convolutional feature map. Regions of interest are identified on the feature map and classified by additional fully-connected layers. This enables nearly cost-free region proposals once the image is passed through CNN.

Faster R-CNN

Proposed by Shaoqing Ren in 2015, Faster R-CNN replaces the selective search algorithm with a Region Proposal Network (RPN). The RPN shares convolutional layers with the detection network, allowing nearly cost-free region proposals like Fast R-CNN. The RPN improved both speed and accuracy over Fast R-CNN.

You Only Look Once (YOLO)

YOLO is a single-shot detector proposed in 2016 by Joseph Redmon. It treats object detection as a regression problem to spatially separated bounding boxes and class probabilities. A single neural network predicts bounding boxes and class probabilities directly from full images in one evaluation. This makes YOLO extremely fast, but it sacrifices some accuracy.

Single Shot Multibox Detector (SSD)

SSD was introduced in 2016 by Wei Liu. It uses a single feedforward convolutional network to directly predict classes and anchor offsets without requiring a secondary regional proposal network. This results in a simple and fast architecture that achieves accuracy comparable to state-of-the-art models like Faster R-CNN while running significantly faster.

The key algorithms section provides an overview of seminal object detection models that highlight the evolution of the field. It focuses on the speed and accuracy tradeoffs between region proposal-based methods like R-CNN, Fast R-CNN, and Faster R-CNN versus single-shot detectors like YOLO and SSD. This gives readers context on the key innovations that have driven progress in object detection.

Performance Evaluation

Evaluating the performance of object detection systems is critical to understanding their capabilities and limitations in real-world use cases. There are several key metrics commonly used to quantify object detection performance:

- Precision measures the fraction of detected objects that are correct. For example, if a model detects 100 objects and 90 of them are correct detections, then its precision is 90%. High precision relates to a lower false positive rate.

- Recall measures the fraction of actual objects that are correctly detected. So if there are 200 objects in an image and the model detects 90 of them, its recall is 45%. Higher recall means the model can successfully detect more of the total objects present.

- mAP (mean Average Precision) is the primary metric used to evaluate overall object detection performance on large data sets. AP summarizes the precision-recall curve for a particular class into a single value. mAP is the mean of AP values across all classes, providing a global metric across class performance. Typical mAP values range from 70–90% for leading algorithms on benchmark data sets.

- FPS (Frames Per Second) measures inference speed. Real-time applications often require a minimum FPS threshold. There is generally a precision-speed tradeoff, with faster models being less accurate. State-of-the-art detectors achieve 20–30 FPS on high-resolution images.

By evaluating these metrics during model development, researchers can better understand the detection capabilities of real-world data sets and applications. Improvements in both accuracy and speed are critical to enabling the widespread adoption of object detection systems.

Data Sets

Several key data sets have driven research and advancements in object detection. These curated data sets provide the training and test images needed to develop and benchmark computer vision systems. To maximize the effectiveness of these data sets, collaborating with experts in computer vision development services can help in creating robust and accurate systems tailored to your business needs.

MNIST

The MNIST database contains 70,000 grayscale images of handwritten digits. Each image is 28×28 pixels, and digits 0–9 are represented. MNIST is considered a “hello world” data set for computer vision, used for training basic image classification models. However, it lacks the complexity needed for rigorous object detection research.

COCO

The Microsoft Common Objects in Context (COCO) data set contains over 200,000 labeled images depicting complex everyday scenes with common objects in their natural context. It has over 80 object categories like people, animals, household items, and vehicles. COCO is a preferred choice for pushing object detection research.

ImageNet

ImageNet contains over 14 million images categorized into ~22,000 noun classes. While it is not specifically curated for object detection, it provides ample labeled training data across a vast array of objects. ImageNet was instrumental in advancing the state-of-the-art in object classification.

PASCAL VOC

The PASCAL Visual Object Classes (VOC) data set has historically been the most widely used for object detection research. Versions since 2005 contain ~10,000 images with bounding boxes and labels spanning 20 object classes like people, vehicles, and household objects. Its relatively small size allows faster training times compared to modern data sets.

Real-World Applications

Object detection and localization have become indispensable in many real-world computer vision applications today. Some notable examples include:

Autonomous Vehicles

Self-driving cars rely heavily on object detection to understand their surroundings. The vehicles use object detection algorithms to identify other cars, pedestrians, traffic signals, road signs, and more. By determining the locations of these objects, the autonomous vehicle can better navigate itself safely through complex environments. Object detection enables features like automatic emergency braking, lane departure warning, and adaptive cruise control in self-driving cars.

Surveillance

Object detection is used extensively in video surveillance systems to monitor areas and detect intruders, threats, or irregular activities. Specific objects like people, cars, and luggage can be recognized to provide information about who or what is present in a scene. Object detection algorithms allow surveillance cameras to track objects of interest and raise alerts when needed.

Image Search

Image search engines like Google Images use object detection to analyze the content of images and determine which images match a user’s query. For example, if a user searches for “dog”, the algorithm will identify all images containing dogs by detecting this object within the images. Object localization further improves image search by pinpointing where the object is found in the image.

Latest Advancements

The field of object detection continues to make rapid progress, with improvements in 3D object detection, video object detection, and speed and accuracy. Some key recent advancements include:

3D Object Detection

3D object detection has become an important area of research. Lidar sensors and stereo cameras can provide depth information to detect objects in 3D space. This allows for more accurate localization and orientation estimates. Some examples are Vote3Deep, PointPillars, and MVX-Net.

Video Object Detection

Video object detection aims to identify objects across frames in a video stream. This is more challenging than image detection due to motion blur and occlusions. Some approaches use optical flow to propagate detections across frames. Other methods incorporate temporal modeling via recurrent neural networks. Examples include Tubelet Proposals and MANet.

Two-Stage Detectors

Two-stage detectors like Faster R-CNN produce highly accurate detections but have high latency due to region proposal generation. One-stage detectors like YOLO and SSD sacrifice some accuracy for real-time performance by eliminating the region proposal step. Improvements to one-stage detectors like RetinaNet, EfficientDet, and NanoDet have narrowed the accuracy gap while maintaining speed.

Efficient Neural Network Architectures

Efficient neural network architectures, such as MobileNets and EfficientNets, use techniques like depthwise separable convolutions to build smaller, faster models. This enables object detection on mobile and embedded devices. Model compression techniques like network pruning and quantization also improve efficiency.

Self-Supervised Learning and Semi-Supervised Learning

Self-supervised learning and semi-supervised learning methods that can learn from unlabeled or weakly labeled data have emerged as ways to reduce annotation requirements and improve generalization. Examples include contrastive learning and pseudo-labeling.

Synthetic Data Generation

Synthetic data generation through rendering pipelines and generative adversarial networks is also being explored to augment training data. This can help improve robustness.

Overall, the field continues to push the boundaries of what is possible in terms of accuracy, speed, and capability to address new and evolving real-world use cases. Advances in deep learning, model efficiency, and data augmentation will open up even more applications going forward.

Future Outlook

Object detection and localization have come a long way in recent years, but there are still many opportunities for future improvements and research. Here are some of the key areas where we may see advances in the coming years:

Potential Improvements

- Increased accuracy, especially for small, occluded, and unusual objects

- Faster detection and localization, moving closer to real-time performance

- Ability to detect and localize a larger number of classes and finer-grained categories

- More efficient models with lower computational requirements

- Performance improvements on video data and webcam streams

Challenges Still to Be Addressed

- Robustness to lighting conditions, weather, motion blur, and other environmental variables

- Handling occlusion, especially when large percentages of objects are hidden

- Scaling up to detect thousands or millions of object categories

- Deploying models effectively on embedded systems and edge devices

Promising Research Directions

- Self-supervised and unsupervised learning to reduce annotation requirements

- Leveraging synthetic data to expand available training datasets

- Combining computer vision with other modalities like radar and lidar

- Exploring transformer architectures as an alternative to CNNs

- Developing benchmarks and competitions focused on real-world applications

In the coming years, we can expect continued innovation in object detection and localization algorithms, network architectures, and training techniques. The key goals will be improving accuracy, speed, scalability, and robustness to deploy these systems in real-world environments. Exciting times lie ahead as computer vision continues to advance.